You know what? The way writers work is about to shift again—and fast. Not in a sci-fi way. In a practical, Tuesday-afternoon way. Keyboards won’t disappear, and neither will bylines. But research, first drafts, editing, and even how readers reach your work are changing. Let’s stick to facts, name names, and keep this grounded in what’s actually happening.

When researchers gave professionals an AI assistant for mid-level writing tasks, people worked faster and produced better drafts. One randomized experiment found that the time to complete tasks fell by about 40% while quality rose 18%. A separate field experiment with Boston Consulting Group consultants found 25% faster completion, more tasks finished, and a notable bump in quality, with the largest gains among consultants who had started out below average.

But tools aren’t magic. The Harvard/BCG work calls it a “jagged frontier”: on tasks inside the tool’s strengths, results shine; outside them, quality can drop. Translation: let AI draft boilerplate, summaries, or “first pass” copy, but keep humans steering nuance, judgment, and checks.

And yes, hallucinations remain a thing. Google’s AI Overviews had some very public misfires—remember the glue-in-pizza saga? Google says it narrowed triggers and added guardrails after outlandish answers went viral. Useful lesson for writers: AI can sprint; you still need to steer.

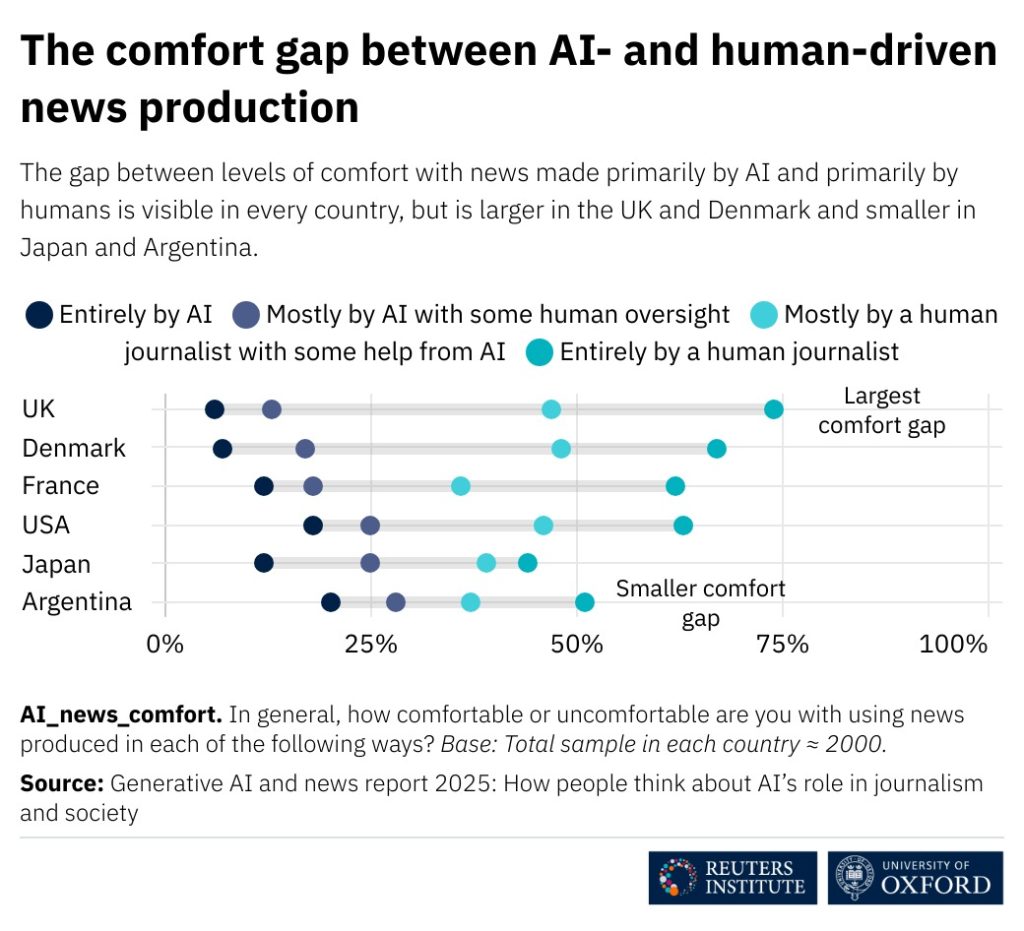

Do readers even want AI-written content?

Short answer: not much, unless people know a human is still in charge. The Reuters Institute Digital News Report 2025 finds that audiences across countries are wary of news made mostly by AI. Comfort rises when AI works behind the scenes and a human leads, though people remain most at ease with news made entirely by humans. As one summary of the findings puts it, “Just 12% are comfortable with fully AI-generated news,” while trust is far higher for human-led work. That’s a big social signal for anyone publishing under their own name.

The same report, and follow-ups, note a growing share of people see AI-generated answers in search each week. People like speed; they don’t like mystery. Labeling and clarity help—more on that soon.

Google’s quality crackdown and the rise of “zero-click”

In March 2024, Google rolled out a core update and spam update aimed at reducing low-quality, unoriginal content. It wasn’t an anti-AI crusade; it was an anti-junk push. Google’s guidance is blunt: helpful, people-first content wins, regardless of how you draft it. That’s the line to write toward.

Now the painful bit. Since AI Overviews arrived, zero-click searches—where users get what they need without visiting a site—have grown. Similarweb data (widely cited across the industry) shows zero-clicks rising from 56% to 69% within a year. Trade groups and publishers report real traffic dents, and some have gone to court. Penske Media (Rolling Stone, Billboard, Variety) sued Google over AI Overviews in September 2025. The traffic pie is being sliced differently, and that affects writers’ reach and revenue.

Is it all doom? Not quite. AI platforms (including chatbots) are becoming referral sources, and some outlets see steady search referrals overall. The direction is messy. The safe bet for writers is clear attribution, memorable expertise, and distribution that doesn’t rely on one faucet.

Your tools are moving closer to you—right into your OS

This year and next, writing aids won’t live only in your browser tab. Apple Intelligence adds system-wide Writing Tools across Mail, Messages, Pages, and more—tone suggestions, summaries, rewrites, all designed to run privately when possible, with disclosure when ChatGPT is used. Microsoft is bundling Copilot deeper into Windows and Microsoft 365, with new features to spin up Word docs and slides straight from chat. The platform players are telling you where the writing workflow is going: closer, faster, and more embedded.

Even pricing is signaling permanence. Microsoft launched Microsoft 365 Premium for individuals at $19.99/month, positioning Copilot as a standard part of office life, not a novelty. For writers, that means your collaborators, clients, and editors will expect AI-accelerated drafts and faster turnarounds as the default.

Jobs and pay: who’s exposed, who’s protected (for now)

The IMF estimates that almost 40% of jobs worldwide are exposed to AI, rising to about 60% in advanced economies. The ILO adds nuance: many roles won’t vanish; they’ll change, with more task re-mixing than straight replacement. For writers, that often means fewer hours spent on rote drafting and more on reporting, analysis, and editorial judgment.

Some creative labor markets feel the squeeze already. Translators report work tilting toward low-paid post-editing as clients route text through DeepL or similar tools first. Not every niche is hit equally—you can still command rates in specialized or sensitive domains—but pricing pressure is real.

On the other side, unions have drawn bright lines. The Writers Guild of America contract bars studios from using AI to write or rewrite scripts and says AI output can’t be used to undermine writers’ credits. Writers can choose to use AI, but companies can’t force it. That’s a template other creative sectors watch closely.

Copyright and licensing: the ground rules are solidifying

In the U.S., the Copyright Office reaffirmed a simple rule in 2025: copyright requires human authorship. If an AI system determines the expressive elements, that material isn’t protected. Human contributions, such as your own expressive text, creative selection and arrangement, or substantive edits to AI output, can be protected case by case, and applicants must disclose AI-generated elements when registering. Courts have backed this, and a fresh petition to the Supreme Court is testing the edges again. For writers, keep receipts on your role: outlines, edits, notes.

In Europe, the EU AI Act is phasing in obligations. Some prohibitions kicked in February 2025; governance rules and obligations for general-purpose AI models began August 2025; broader requirements land in August 2026, with more to follow for high-risk systems. For publishers and toolmakers, transparency and documentation obligations are becoming table stakes. That bleeds into how your content is labeled and traced.

Meanwhile, money is moving. News Corp, the Financial Times, and Axel Springer all announced licensing deals with OpenAI; AP had one earlier. Reddit and Stack Overflow inked training-data agreements. On the litigation front, Anthropic agreed to a $1.5B settlement with authors over pirated copies of their books that the company had collected for training. Whether you cheer or cringe, the direction is obvious: more formal deals, more payment for inputs, more clarity about who gets compensated.

Big platforms are pushing disclosure. YouTube now requires creators to flag realistic AI-generated content and says it will label such media for viewers. This isn’t a niche policy; it shapes audience expectations across the web. Add in content-provenance standards like C2PA and corporate watermarking efforts, and you can see where we’re headed: clearer trails about “who made what.”

The detection myth: why you shouldn’t hang your career on a score

Let’s say it plainly: “AI detectors” aren’t reliable enough to arbitrate careers. OpenAI sunset its own classifier, noting it mislabeled text and struggled with short passages. University studies echo the point: false positives and false negatives remain common, and English-language learners are hit hardest. Some institutions have paused detector use or advise caution. If you’re a writer or editor, favor process transparency and source documentation over secret scores.

What a writer’s next-12-months workflow looks like (for real)

Here’s the thing: the best setup sounds almost old-fashioned.

You start with reporting or research—your sources, your interviews, your own notes. You ask an assistant (Copilot, Claude, Gemini, ChatGPT, Apple’s Writing Tools) to summarize briefings, outline angles, and suggest structure. You keep your voice; you use the tool for speed.

You draft, knowing you’ll fact-check every claim and add citations. You use the assistant for language cleanup, clarity passes, and style checks. Then you run a manual logic audit. Does it flow? Are the numbers supported? Are quotes accurate and short?

You also write with search in mind, but not as a puppet. Google’s public line is consistent: reward helpful, reliable, people-first content. That means original reporting, first-hand insight, actual expertise—and yes, clean headings and good formatting. If your piece reads like it was written to impress a robot, you’ve already lost.

Finally, you label when AI helped and how. Readers don’t need your entire toolchain, but a sentence like “Edited with AI for clarity; all facts and quotes verified by the author” builds trust in a way a bland “written by AI” badge never will.

Traffic strategy without the hand-waving

Search results will keep answering more questions without a click. Reports suggest zero-click rates have climbed in the AI Overviews era. Some publishers are suing; others are optimizing for features that still send traffic. Either way, writers can hedge by growing direct channels—newsletters, communities, podcasts—and by writing with enough original value that your work gets cited by the very tools compressing the web.

And keep in mind: even the big players are adjusting. Google says its updates target junk, not the use of AI per se, and it continues to tweak Overviews after those early viral flubs. The lesson for writers is consistent: if your work is the source others must cite, you’re better insulated from format churn.

Inside newsrooms: policies and the fine print

Legacy media aren’t sitting idle. Reuters has a public AI policy emphasizing accuracy, transparency, and human oversight. AP has long used automation for formulaic earnings reports and says it freed up about 20% of the time reporters had spent on earnings coverage, time they could redirect to higher-impact stories. In the last year, several outlets had to pull embarrassing AI-written or AI-assisted pieces that didn’t meet standards, a reminder of why guardrails matter.

Public trust is delicate. The 2024 and 2025 Reuters Institute reports show people are more comfortable with AI “behind the scenes” than up front. If you’re a newsroom leader, that’s your north star: helpful augmentation, human accountability.

Okay, but what about money—especially for freelancers?

There’s pressure. Some clients expect lower prices for routine work that AI can churn. Others now pay more for on-the-record reporting, named experts, and distinctive analysis. Portfolio value drifts toward verifiable expertise—bylines, case studies, research threads, source lists. The writers who win tend to show proof they’re more than a phrasing engine. And in markets like translation, the shift to post-editing is pushing pros to narrow their niche or change the value proposition.

One more market signal: consulting giants say AI skills are now baseline. BCG expects a large share of revenue from AI services and reports heavy internal adoption. The knock-on effect for writers in corporate settings is clear: your peers use AI; your output is measured against theirs.

The near-term rulebook: practical and enforceable

- Human authorship still rules for copyright. If AI determines the expression, you can’t claim it. Document your role. Keep drafts. Disclose AI-assisted parts when registering creative work in the U.S. (U.S. Copyright Office)

- Platform policies are drifting toward clear labels. YouTube asks creators to disclose realistic AI media; expect more platforms to follow. Treat provenance data and content credentials as part of your publishing checklist. (MIT News)

- EU compliance is coming in phases. If your writing life touches European audiences or clients, expect model providers and, in some cases, publishers to satisfy transparency obligations through 2025–2026. You’ll see more standardized disclosure and safety docs cascading down the stack. (Digital Strategy)

- Licensing is getting normalized. Publishing deals with AI companies, data partnerships, and (yes) settlements are mapping real payment paths. That can increase demand for writers who originate data-rich work others want to license. (AP News)

A writer’s playbook for the next 12 months

Keep it simple, and keep receipts.

- Use AI where it’s strong: outlining, summarizing transcripts, variant headlines, grammar passes. Verify every claim, and add your own reporting to lift the ceiling on quality. The productivity upside is real when you stay inside that “frontier.”

- Write for humans first. Google doesn’t penalize you for using AI. It penalizes unhelpful content. If a reader can’t learn anything new from you, neither can a ranking system.

- Label responsibly. Not because a detector might flag you, but because readers reward honesty. Detectors remain unreliable; process transparency earns trust.

- Go direct. Newsletters, communities, courses—anything that shortens the path to your readers. With zero-clicks rising, owning even a small channel matters.

- Track your role. If you might register your work, note what you did versus what the tool produced. Preserve versions. It’s good craft and good compliance.

What to watch through 2026

Policy first: EU timelines keep advancing, with broader obligations in 2026. Platform rules will harden around labels and provenance. Lawsuits over training data will keep turning into licenses or settlements. In parallel, operating systems will grow friendlier for writers—fast, embedded tooling; cleaner privacy defaults; tighter integrations with mail, docs, and calendars. That changes habits as much as headlines.

And search? Expect fine-tuning. Google continues to claim it’s cutting low-quality content, not attacking AI itself, while publishers measure the cost of zero-click experiences and push for compensation. Writers who cultivate a clear voice, cite sources, and build direct relationships will feel these tremors least.

The immediate future favors writers who use AI without letting it decide. Let it speed the busywork. Keep the reporting and the judgment. Be explicit about your process. Publish where people read you on purpose, not by accident. And write pieces so distinct that even AI needs to point back to you.

That’s not nostalgia. That’s the path through the next year with your voice intact—and your calendar not crying.