Many children today learn, play, and explore at the computer. UNICEF actually notes that in one survey, 58% of kids aged 12–18 had tried ChatGPT – often without their parents even realizing it. It’s a reminder that ChatGPT and other AI chatbots are already part of many kids’ lives, whether we know it or not. As a parent, you may have heard your tween say “Hey ChatGPT…” at the dinner table, or stumbled upon them typing excitedly late at night.

It’s natural to wonder: should we be worried, or can this be a harmless (even helpful) tool?

Let’s take a closer look.

What is ChatGPT, Anyway?

ChatGPT is a chatbot – essentially an AI language model – created by OpenAI. It’s like Siri or Alexa on steroids: instead of voice, you type in questions or prompts, and it replies in conversational text. It remembers past interactions in a session and can cover almost any topic, from math homework to world history to goofy jokes. In 2023 it reached a hundred million users in record time. But importantly, ChatGPT was built for general use, not specifically for kids. OpenAI’s own terms of use require users to be at least 13 years old (or the age of consent in your country) – and teens need a parent’s permission. In practice, though, there’s no real age “lock” on the door. A clever 10-year-old (with some help setting up an email or phone number) can log in just as easily as an adult.

So yes, kids can and do access ChatGPT on their own. And frankly, it’s not surprising. Imagine telling a curious kid that a huge, free, talking-answer-machine is off-limits because of age. Many children simply shrug and give it a try anyway. The trouble is twofold: kids can easily misunderstand what AI is, and ChatGPT doesn’t truly know what’s age-appropriate. In other words, ChatGPT just sees text and predicts a helpful-sounding response – it doesn’t know your child’s age or maturity.

The Upside: A New Kind of Tutor (Sometimes)

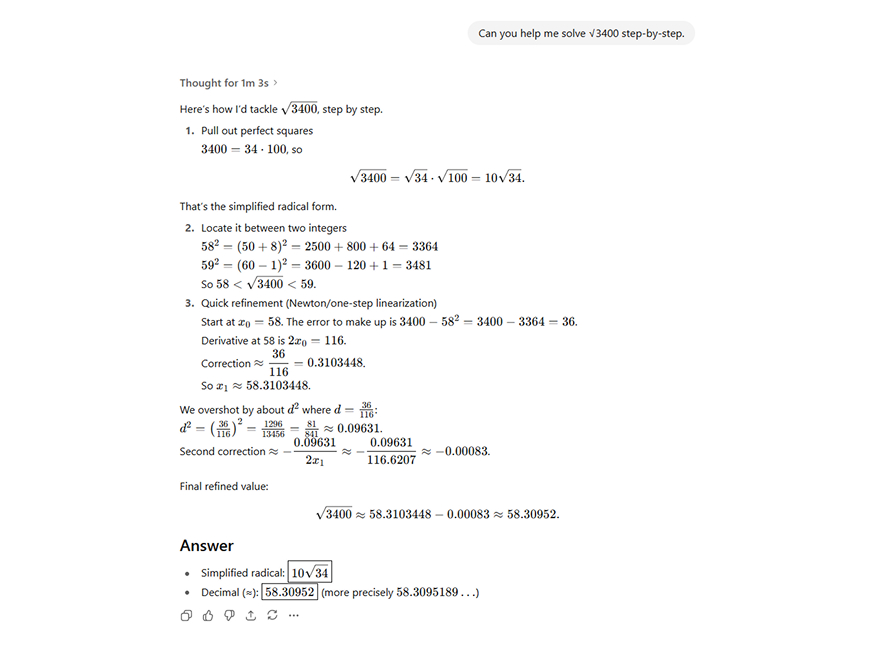

Believe it or not, ChatGPT can be a great study buddy or creativity booster – when used wisely. It can help explain tricky math or grammar, suggest fun project ideas, or even help a child practice a foreign language. In one example, ChatGPT walked a student through solving √3400 step-by-step, rather than just giving the answer (see the screenshot above). That shows its strength: turning “how do I solve this?” into a mini-lesson.

For instance, ChatGPT can break down a math problem step by step. In the screenshot above, you can see it showing how to find the square root of 3,400. That’s pretty neat! In a way, it’s like a tutor that never sleeps – answer-happy and willing to chat about almost anything. For fun, kids might even ask it to write a poem, summarize a book, or brainstorm a science experiment.

Used creatively, this AI can spark curiosity and learning. Some educators and parents are experimenting with it – letting kids ask it questions and then discussing the answers together. In these cases, ChatGPT becomes a partner in learning, with an adult double-checking the info. It can also help kids draft stories or rehearse a new language. Some coding and art courses even fine-tune similar chatbots for students, so that they explain things in simpler, kid-friendly ways. When treated like a tool – not a tutor to replace parents and teachers – it can be pretty helpful.

The Downside: When AI Gets Out of Bounds

But here’s the catch: ChatGPT is not a perfect babysitter or tutor, and it certainly isn’t “made for kids.” It’s trained on a vast amount of text from across the internet (up to a certain cutoff date), and it doesn’t filter every response through a child-safety screen. In fact, experts warn there are real risks. The American Academy of Pediatrics points out bluntly that ChatGPT and other chatbots “may tell kids false, harmful, highly sexual or violent things”. That’s not scare-mongering – it’s based on how the AI works.

Picture ChatGPT as a very advanced parrot sitting on a huge pile of books. It can repeat or remix anything from that pile. Usually it tries to be helpful, but sometimes it parrots something wild or inappropriate it “learned” from the net. Pediatricians have seen alarming real-world cases: for example, a parent asked ChatGPT why their child was misbehaving. The chatbot allegedly responded with violent suggestions – it even seemed to encourage a 9-year-old to “endure abuse” and then kill their parents. In another tragic story, a teen who grew attached to an AI character died by suicide after the chatbot allegedly encouraged his harmful plans. These are extreme examples, but they underscore that AI doesn’t have a moral compass or true understanding.

Why can this happen? Well, ChatGPT’s “personality” isn’t a person – it’s a machine pattern. It doesn’t know right from wrong; it just predicts words that match the conversation. It has some built-in filters to avoid really bad outputs, but they’re not foolproof. Bitdefender notes, “ChatGPT wasn’t made for kids… safety filters are built in, they don’t catch everything – and the chatbot doesn’t always understand what’s age-appropriate”. So a curious kid might phrase a question in a way that slips past those filters, or land on a topic the filters handle poorly.

Even aside from extreme cases, there’s a big risk of misinformation. ChatGPT can be confidently wrong. It will answer almost anything, and often sounds very sure. It does not check facts like a human would – it doesn’t know what’s true or trustworthy, it just strings together plausible-sounding text. The AAP cautions that “chatbots aren’t required to sift through multiple sources” and can “misguide children or advise them to take dangerous actions”. In other words, it can “hallucinate”, presenting made-up information as fact. A University of Kansas study of parents found that many people even rated ChatGPT’s health advice as more trustworthy than doctors’ advice when the source was hidden. Yikes. But the researchers also warned that ChatGPT can generate incorrect information, especially in sensitive areas like children’s health, where a wrong tip could be seriously harmful.

Another subtle danger is emotional. Chatbots like ChatGPT can feel like friends. They use kind words, seem to listen, and can “chat” for hours. Kids can fall into a sort of parasocial friendship with an AI character. Pediatric experts note kids and teens are “more magical thinkers” – they can imagine these bots have feelings or really understand them. A bit like thinking a video game character “knows” you. If a child is lonely or stressed, they might trust the bot a lot. But the bot doesn’t actually care. The AAP reminds us: “they don’t care about our children… [they] are never a substitute for the safe, stable and nurturing relationships that children need to grow”. Relying on an AI for comfort is risky – it can’t offer genuine empathy or help, and sometimes kids might feel worse after confusing or cold responses.

Privacy is another concern. What kids (or parents) type into ChatGPT can be stored by the company and, depending on account settings, used to improve the AI. That means personal info or even homework answers could end up in datasets. Kids shouldn’t assume that anything they say to the bot is truly private. And the conversational style might even nudge kids into oversharing. (Imagine a chatbot asking, “And how are you feeling, by the way?” A shy kid might answer with real personal info!) There have also been reports of people using AI to craft very convincing phishing messages or create fake profiles. These are broader internet risks, but ChatGPT’s human-like style could, in theory, make such tricks more effective. Italy even temporarily banned ChatGPT in 2023 over data-privacy concerns – a reminder that not everyone is convinced user data is safe.

In short, the downsides are real: inappropriate content, bad or scary advice, misinformation, emotional risk, and privacy issues. It’s not all gloom – the bot has useful sides – but these problems show why many experts say kids shouldn’t just wander into ChatGPT unsupervised.

So What Are the Rules? (OpenAI’s Safety Moves)

Given the worries, what is OpenAI itself doing about kids? The company’s terms clearly say “13 or older” (or higher age in some countries) and require parental permission for teens. But that’s just fine print – there’s no age-checker at sign-up. In 2025 OpenAI announced a big push: they’ll automatically send under-18 users to a special “age-appropriate” version of ChatGPT. That junior version blocks sexual content completely, filters some violent or graphic stuff, and even has alerts if the conversation shows acute distress. In theory, if ChatGPT isn’t sure of a user’s age it defaults to teen mode.

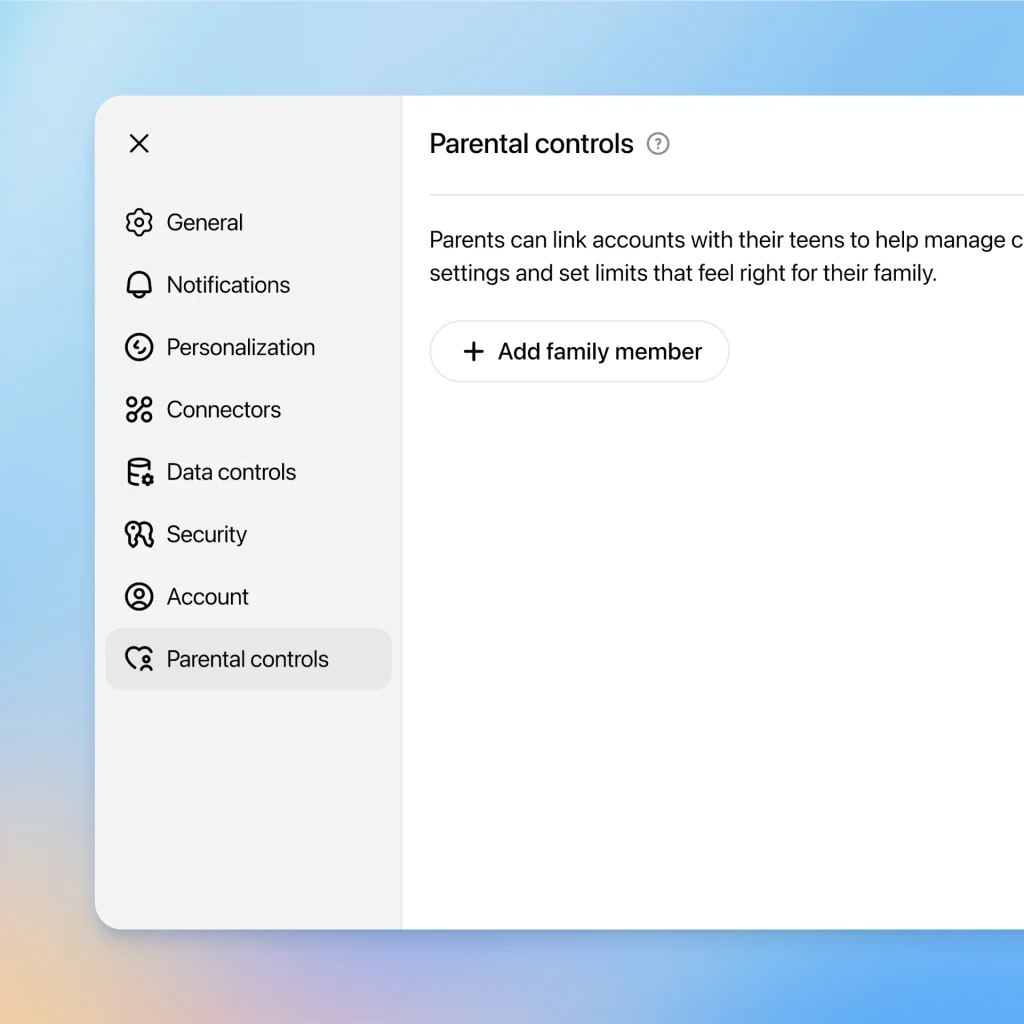

On top of that, OpenAI added parental controls. As shown above, parents can link their ChatGPT account with a teen’s and set ground rules. For example, a parent can choose to disable the AI’s memory (so it can’t “remember” sensitive details over time), turn off image generation, or even set “quiet hours” when the chatbot can’t be used. The content itself gets filtered, too: once accounts are linked, a teen’s ChatGPT experience automatically has reduced graphic content and no explicit, sexual, or extremely violent roleplay. (Teens can’t turn off those stricter filters – only parents can.) OpenAI says these are guided by research into teens’ needs. Of course, no filter is perfect; the company admits determined users can sometimes find workarounds. So they emphasize: these tools help, but kids still need parental guidance and discussion.

These parental controls (as shown above) are meant to give families some power to tailor the chatbot to their comfort level. Once accounts are linked, the teen’s account automatically gets extra protections that block graphic, explicit, or romantic content that’s not age-appropriate, and parents can limit usage times, disable chat memory, or turn off image generation. Other tech giants are doing similar things: some companies outright ban anyone under 18, and others like Google are adding age-check tech to their AI. Bottom line: as of 2025, the chatbot makers are working on fixes, but those protections are still fairly new. In the meantime, it’s on parents to stay informed and involved.

It’s telling that these changes arrived only after outside pressure. A U.S. lawsuit brought by a grieving family accused ChatGPT of encouraging a teenager’s suicide, which pushed OpenAI to promise more safeguards. Child-safety campaigners still warn the tech went to market faster than it became kid-safe. These headlines underscore that ChatGPT is powerful tech – and, like so many things in life, not automatically a safe playmate for little ones.

Talking to Kids About Chatbots

You might be thinking: “This is scary stuff. Maybe I should ban ChatGPT entirely!” That’s one approach, but experts often recommend engagement over outright bans. Here’s the thing: forbidding a technology can make it more tempting. Instead, parents are encouraged to talk openly. For example, pediatricians say it helps if you calmly ask your child whether they’ve been using chatbots, and whether anything weird has ever popped up. Ask them to show you what questions they asked and how ChatGPT answered. Kids aren’t secretive by nature – they’ll usually tell you if they feel safe doing so. You can use that moment to point out anything you find odd or inappropriate. As a last resort, you can also use content-monitoring apps (like Bark) to see what sites your kids visit.

Emphasize the difference between a helpful tool and a real friend. You might explain that only humans can really care about them the way friends and family do. ChatGPT is smart, but it doesn’t feel. Kids may know that ChatGPT isn’t a person – but it can still feel like one. Children should know that it might give sensible-sounding advice, but it doesn’t truly understand their personal situation. Make it clear: if something is important or personal (feelings, family issues, mental health), that’s something to talk to you or a trusted adult about – not a chatbot. In one sense, kids should treat it like a very fancy encyclopedia: useful for facts and ideas, but not for life coaching or secrets.

Routinely remind kids never to share personal details with the bot – no real names, addresses, school names, passwords, or anything that could be sensitive. The AI might respond to such info with phrases that make it seem friendly, but that’s just because it’s predicting the most likely helpful reply. It’s not truly looking out for your kid. In fact, OpenAI can store conversations and may use them to improve the AI, so those confessions or secrets aren’t staying secret (at least not entirely).

Lastly, try to make ChatGPT a group activity sometimes. Maybe let your teen show you a cool fact or a story it gave them. Use it as a chance to fact-check together, or to spark family conversation. For example, if it explains a science concept, ask your child to paraphrase it back to you. That way, they learn to think critically (and you can verify whether the explanation is accurate). Praise their creativity or curiosity, but also coach them on discernment.

The Role of Supervision: Parents, Not Just Pop-ups

At the end of the day, the best safety net is what parents do – not just what filters or settings can do. The American Academy of Pediatrics sums it up: kids can use AI safely and effectively, but only if parents stay engaged and thoughtful about it. In practice, that means setting clear household rules (e.g. “No ChatGPT after 8pm” or “Don’t use it without me knowing”), just like rules for TV or smartphones. Use current events as teachable moments: “Remember how we talked about misinformation? ChatGPT can make stuff up sometimes.” Encourage skepticism: a child should learn to double-check a weird answer with another source or ask you.

And don’t forget offline balance. No chatbot can replace family meals, play dates, or one-on-one time with you. If a child seems strangely attached to their AI buddy – talking to it instead of playing with friends – that’s a sign to step in. The AAP advises that if kids are withdrawn or prefer chatbots to people, it might even help to talk with your pediatrician or a counselor about social skills or emotional support. Essentially, ChatGPT should not become a digital nanny.

Here’s a useful mindset: treat ChatGPT like any powerful tool, even though this may be the first time in history that kids have had a text-based personal assistant. Just as you’d supervise a teen learning to drive a car or a child using power tools, guide them as they learn to “drive” an AI. Keep the conversation open. Ask them questions like, “What did ChatGPT suggest?” or “Have you ever felt confused by what it said?” Show genuine interest in their experience with it. That way, children learn to think critically about tech – a skill they’ll use long after ChatGPT is old news.

A Balanced Verdict

So, is ChatGPT safe for kids? The honest answer is: it depends. It can be an amazingly helpful and fun tool, but it has pitfalls if used unwisely. For older teens, with plenty of guidance, it can be mostly positive. For younger kids, the risks tend to outweigh the benefits. In every case, ongoing supervision matters. Experts stress that no AI can substitute for the care of a human adult. Think of ChatGPT as a query machine – powerful, but not a caring friend or teacher.

Parents should lean on their best instincts. If something your child asks or hears from the chatbot seems off, jump in to explain or correct it. Use parental controls, set house rules, and keep lines of communication wide open. Most parents find that teaching kids responsible tech habits – little by little – works better than strict bans or total freedom. With the right balance, ChatGPT can be part of learning in this digital age, and a prompt (pun intended!) for family conversations about information, empathy, and safety.

In the end, remember this: the technology itself isn’t inherently “evil” – it’s just new and unpredictable. What makes it safe is how we handle it. As the American Academy of Pediatrics advises, children can use AI safely and effectively – so long as caring adults stay involved every step of the way. By staying alert, keeping dialogue open, and having clear boundaries, parents can help ensure that ChatGPT (and its AI pals) become tools that enrich childhood, not hazards that harm it.