Norway went beyond platitudes and put targets in writing. The national digitalisation strategy says the public sector should use AI to build better services and work more efficiently, with a clear quantitative aim: “Goal 2030: All government agencies use AI… Currently, 43 percent do.” That’s from the government’s own strategy document, published in late 2024.

If you want the longer arc: Norway’s 2020 National AI Strategy laid the groundwork and picked focus sectors—health, oceans, public administration, energy, mobility—while tying everything to ethics and privacy. It’s not a “move fast” plan; it’s a “move fast inside guardrails” plan.

Two big moves in 2025 settled who oversees the rules and who helps people follow them.

First, the government decided Nkom (the Norwegian Communications Authority) will coordinate AI supervision, making sure EU AI Act rules are applied consistently in Norway. That’s the official line:

“The Government has decided that the Norwegian Communications Authority (Nkom) will be the national coordinating supervisory authority for AI.” (Regjeringen.no)

Second, Norway is standing up KI-Norge (AI Norway)—a national hub inside the Digitalisation Agency (Digdir) to help the public sector and industry use AI responsibly and well. Think playbook, not police. The government frames it as a “national arena for innovative and responsible use of AI.”

Zoom out and you see a broader accountability ecosystem. Datatilsynet (the data protection authority) runs a Regulatory Sandbox for AI, explicitly to “promote the development of innovative solutions” that are “ethical and responsible.” That’s not marketing copy; that’s the regulator’s description. They even brought generative-AI pilots into the sandbox in 2024.

And yes, debates are live. Norway’s DPA has recently pushed for stricter limits on remote biometric identification, reflecting a cautious stance on face recognition in public spaces. The direction of travel is clear: permission, not forgiveness.

At the front desk: chatbots that actually do work

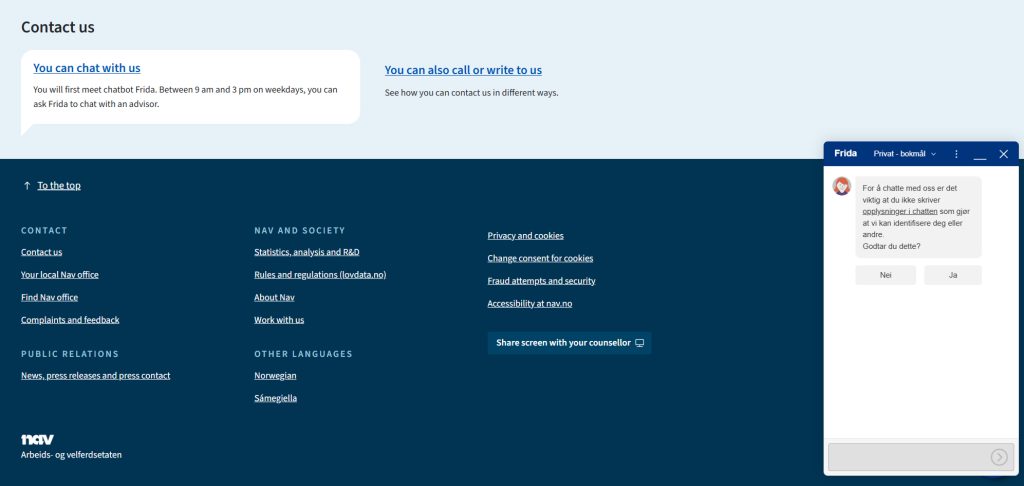

If you’ve used NAV (Norway’s Labour and Welfare Administration), you’ve met Frida, the 24/7 chatbot. NAV says so plainly on its contact page: “You will first meet chatbot Frida.” Frida is always on and can hand you over to a human during office hours. During the pandemic, Frida absorbed a massive spike in inquiries and took a large share of the load off human advisers, according to multiple case write-ups.
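The routing rule NAV describes (the bot is always on; a human is reachable only during office hours) fits in a few lines of Python. This is a minimal sketch, not NAV’s implementation: the weekday 09:00 to 15:00 staffed window and the route labels are hypothetical, and the clock is assumed to already be in local Norwegian time.

```python
from datetime import datetime, time

# Hypothetical staffed window; NOT NAV's published opening hours.
OFFICE_OPEN = time(9, 0)
OFFICE_CLOSE = time(15, 0)


def humans_available(now: datetime) -> bool:
    """True on weekdays (Monday=0 .. Friday=4) inside the staffed window.

    `now` is assumed to be a naive datetime already in local time.
    """
    return now.weekday() < 5 and OFFICE_OPEN <= now.time() < OFFICE_CLOSE


def route(wants_human: bool, now: datetime) -> str:
    """The bot always answers; a handoff happens only while staff are on duty."""
    if not wants_human:
        return "bot_answers"
    if humans_available(now):
        return "handoff_to_human"
    # Outside office hours the bot keeps the conversation and says when staff return.
    return "bot_answers_and_explains_hours"


print(route(True, datetime(2024, 3, 4, 10, 30)))  # Monday morning → handoff_to_human
print(route(True, datetime(2024, 3, 2, 10, 30)))  # Saturday → bot_answers_and_explains_hours
```

Treating the bot as the default path and the human as a time-gated escalation is what keeps the 24/7 promise cheap to honour.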

Municipalities have their own workhorse: Kommune-Kari. There’s peer-reviewed research analyzing thousands of real citizen–bot dialogues, noting the bot’s rollout across 100+ municipalities and how it steers residents to the right services. Vendor pages boast big numbers, but the research is the important bit: it shows how citizens actually use the thing and where it helps (and where it doesn’t).

And Altinn, the state platform that serves as the plumbing for digital public services, now ships its own “Altinn Assistant” to help developers navigate the ecosystem. It’s not flashy. It’s the kind of tool that shaves hours off a build and saves you from digging through documentation.

A lot of public AI is quiet automation. The Tax Administration talks about using data and AI for targeted audits and “embedding compliance into systems,” which is bureaucrat-speak for nudging people to do the right thing and catching issues earlier. It’s not Minority Report; it’s more like guardrails and triage.

On the immigration side, UDI (the Norwegian Directorate of Immigration) has experimented with automation and now runs a public-facing AI chatbot. They’re explicit about the privacy posture: “When you interact with the chatbot, your message is processed by an AI-based language model. What you write is not used to train or improve the model.” That’s the tone across Norway’s public AI: use it, say how, and draw a clear line.

In the hospital: AI that finds cancer and speeds up critical calls

This is where the receipts get compelling. Data from BreastScreen Norway, the national breast-cancer screening program, has been used in several studies published in Radiology, the RSNA’s journal. Large-scale results show that AI can flag the vast majority of cancers and could reduce radiologists’ workload by triaging low-risk images. These are not small pilots; the peer-reviewed work covers more than a hundred thousand exams.
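To make “triaging low-risk images” concrete, here is a minimal Python sketch of score-based triage. The 0 to 100 suspicion score, the cutoff, and the policy of giving low-risk exams one human read instead of two are hypothetical assumptions for illustration; they are not the protocol used in the Norwegian studies.

```python
from dataclasses import dataclass


@dataclass
class Exam:
    exam_id: str
    ai_score: float  # hypothetical 0-100 suspicion score from an AI model


def triage(exams: list[Exam], low_risk_cutoff: float) -> dict[str, list[str]]:
    """Split exams into a low-risk queue and a standard queue by AI score."""
    queues: dict[str, list[str]] = {"single_read": [], "double_read": []}
    for exam in exams:
        # Assumed policy: below the cutoff, one radiologist reads instead of two.
        queue = "single_read" if exam.ai_score < low_risk_cutoff else "double_read"
        queues[queue].append(exam.exam_id)
    return queues


def share_of_reads_saved(queues: dict[str, list[str]]) -> float:
    """Fraction of human reads avoided versus double-reading every exam."""
    total_exams = len(queues["single_read"]) + len(queues["double_read"])
    baseline_reads = 2 * total_exams
    saved = len(queues["single_read"])  # each low-risk exam saves exactly one read
    return saved / baseline_reads if baseline_reads else 0.0


exams = [Exam("a", 5), Exam("b", 20), Exam("c", 80), Exam("d", 45)]
queues = triage(exams, low_risk_cutoff=30)
print(queues)  # {'single_read': ['a', 'b'], 'double_read': ['c', 'd']}
print(share_of_reads_saved(queues))  # 0.25
```

The real debate lives in `low_risk_cutoff`: raise it and more reads are saved, but more cancers may land in the lighter-touch queue. That trade-off is what large retrospective studies are designed to quantify before anyone changes a reading protocol.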

Emergency medicine is moving too. The AISMEC project in Helse Bergen is building AI decision support for emergency call-handlers—think faster, more accurate triage in suspected stroke, where minutes matter. That’s research, not a national rollout yet, but it’s a good example of Norway testing AI where the benefit is obvious and the stakes are high.

Norway’s health authorities have also published a joint AI plan for 2024–2025, calling it a “holistic plan aimed at ensuring the safe and effective use of artificial intelligence in the health and care services.” Again, the theme: permissioned progress.

Language, infrastructure, and the sovereign stack

Norway is investing in Norwegian-capable models and datasets. The National Library’s NB AI-lab releases language tech (like NB-Whisper for Bokmål/Nynorsk speech recognition) and maintains Språkbanken, a deep reservoir of machine-readable Norwegian text and speech. Universities are pushing NorLLM, arguing that Norway needs models trained on Norwegian data and aligned with Norwegian values.

On compute, the headline is private money creating gravity. In July 2025, Aker and Nscale, with OpenAI as a partner, announced “Stargate Norway,” a $1B AI facility running on renewable power that aims for 100,000 NVIDIA GPUs by the end of 2026. Whatever your take, that’s the kind of capacity that pulls research and industry toward one geography.

If you’re wondering how ministries and agencies “do AI” without going off the rails, Digdir has published practical guidance for ethical AI in the public sector, including transparency expectations, risk assessment, and specific advice for generative AI. It’s explicitly labeled “open beta,” which I like; it sets the expectation that the advice will evolve as the tech does.

And the culture is shifting. Digdir reports that over half of Norwegian public entities now use AI in their day-to-day work, but only a quarter have turned time savings into real budget savings. Translation: the tech is arriving faster than the management reforms. That’s fixable, but not automatic.

So… is AI “running” Norway?

Bits of it, yes, and increasingly the connective tissue. You meet AI at the front door (chatbots), it helps civil servants triage cases, it is being tested to help radiologists read exams faster and emergency services spot strokes sooner, and supervisors lay out the rules of the road so trust isn’t an afterthought. Norway’s approach isn’t flashy. It’s disciplined, citizen-first, and relentlessly documented.

If you’re leading a team in the public sector, here’s what to actually do next: pick one high-volume citizen interaction, put a clearly governed AI assistant on it, and measure what happens; publish your model card and your red lines on day one; and assign a human who owns the outcomes, not just the model. When that’s steady, move on to a second workflow, ideally one where the evidence (like the screening studies) already points to real impact.
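The “red lines on day one” advice can be made checkable. Here is a minimal Python sketch of a launch gate that refuses to go live without explicit red lines and a named outcome owner, plus a JSON export you could publish as the card. The `AssistantCharter` class and its fields are hypothetical, loosely inspired by the model-card idea rather than any official Digdir template.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class AssistantCharter:
    """Hypothetical public charter for one governed AI assistant."""
    service: str
    purpose: str
    outcome_owner: str  # should name a person; this sketch only checks it is non-empty
    red_lines: list[str] = field(default_factory=list)
    trains_on_user_input: bool = False

    def launch_blockers(self) -> list[str]:
        """Return the reasons this assistant should not go live yet."""
        blockers = []
        if not self.outcome_owner.strip():
            blockers.append("no named outcome owner")
        if not self.red_lines:
            blockers.append("no published red lines")
        return blockers

    def to_public_json(self) -> str:
        """Serialize the charter for publication (keeps æ, ø, å readable)."""
        return json.dumps(asdict(self), ensure_ascii=False, indent=2)


charter = AssistantCharter(
    service="Parking permits",
    purpose="Answer questions about applying for a residential parking permit",
    outcome_owner="",
)
print(charter.launch_blockers())  # ['no named outcome owner', 'no published red lines']
```

Making go-live depend on an empty `launch_blockers()` list turns the advice from a slogan into a gate.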

That’s how Norway’s doing it. And that’s why it’s working.