I don’t have a crystal ball. You probably don’t either. Still, we have signals—surveys, forecasting markets, and the people who build this stuff telling us what feels hard. If you’re trying to aim your career, a product roadmap, or just your expectations, let’s stitch those signals together without pretending to be certain.

“AGI” carries baggage, so I’ll anchor to two ideas that come up in surveys:

High-Level Machine Intelligence (HLMI): machines that could, in principle, do every task better and more cheaply than human workers. In the 2023 AI Impacts survey of thousands of AI authors, the aggregate forecast put a 50% chance of HLMI at around 2047, 13 years earlier than the 2022 edition of the same survey. That’s a big shift, and it’s based on researchers’ own probability distributions.

Full automation of jobs: every occupation, not just individual tasks, can be done by machines. That arrives later in most surveys because reality (regulation, hardware, culture) lags feasibility. The exact number varies, but it generally lands well beyond the HLMI date. That gap matters for societal change but is less useful for timing a capability crossing.

That’s the academic lens. Outside the journals, forecasters tend to be earlier.

What the forecasters are betting on

Metaculus, a platform with a track record on near-term events, has a community median for “Date of Artificial General Intelligence” around mid-May 2030, with the middle half of predictions landing from late 2026 through early 2034. The definition is crisp on their site and the question is actively maintained, which helps reduce semantic drift.

A synthesis from 80,000 Hours earlier this year looked across forecasters and summarized their view as of late 2024: roughly a 25% chance by 2027 and 50% by 2031. You can quibble about definitions, but the direction is clear: timelines among forecasting communities have moved earlier.

So we already have a spread: researchers’ median sits in the 2040s; forecasting communities lean toward the early 2030s, with real mass on the late 2020s.
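If you want to read a rough "chance by year X" off the Metaculus quartiles quoted above, here is a minimal sketch. It assumes straight-line interpolation between the three quoted points, which is my simplification and not how Metaculus aggregates forecasts. The decimal years are also my approximations of "late 2026," "mid-May 2030," and "early 2034," and the function name `prob_by` is hypothetical.

```python
from bisect import bisect_right

# (decimal year, cumulative probability). The decimal years are rough
# conversions of the dates quoted in the text, not official figures.
QUARTILES = [(2026.9, 0.25), (2030.4, 0.50), (2034.1, 0.75)]

def prob_by(year, points=QUARTILES):
    """Linearly interpolate cumulative probability between quoted quantiles.

    Returns None outside the quoted range instead of extrapolating,
    because the tails are exactly where a straight line is least trustworthy.
    """
    xs = [x for x, _ in points]
    if year < xs[0] or year > xs[-1]:
        return None
    i = bisect_right(xs, year) - 1
    if i == len(points) - 1:
        return points[-1][1]
    (x0, p0), (x1, p1) = points[i], points[i + 1]
    return p0 + (p1 - p0) * (year - x0) / (x1 - x0)

for y in (2025, 2028, 2030.4, 2032):
    print(y, prob_by(y))
```

Treat the output as a way to compare years against each other, not as a forecast in its own right. The real community distribution is not piecewise linear.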

A quick reality check from compute

Progress isn’t just vibes. Epoch AI’s data shows training compute for frontier systems has been growing around 4–5× per year, at least through mid-2024. That’s an astonishing rate, powered more by buying more chips and wiring them together than by single-chip breakthroughs. If that keeps up—even imperfectly—capability jumps don’t feel far-fetched.
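To make that growth rate concrete, here is a small sketch of the compounding arithmetic. It assumes the 4–5× annual factor stays constant, which is an idealization for illustration and not something Epoch AI's data promises. The function names are mine.

```python
import math

def compute_multiplier(annual_growth: float, years: float) -> float:
    """Total growth in training compute after compounding for `years`."""
    return annual_growth ** years

def doubling_time_months(annual_growth: float) -> float:
    """Months needed for compute to double at a constant annual growth factor."""
    return 12 * math.log(2) / math.log(annual_growth)

for rate in (4.0, 5.0):  # the 4-5x per year range quoted above
    print(f"{rate:.0f}x per year: doubles every {doubling_time_months(rate):.1f} months")
    for years in (1, 3, 5):
        print(f"  after {years} year(s): {compute_multiplier(rate, years):,.0f}x")
```

At 4× per year, compute doubles every six months and grows roughly a thousandfold over five years (4^5 = 1024). That is why even a partial continuation of the trend is a big deal.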

Industry leaders talk timelines, too. Nvidia’s Jensen Huang said in 2024 that by some definitions—think “passing a broad basket of human tests”—we could get there within five years. It’s not a universal definition, but it signals where a key supplier of compute thinks the puck might go.

Now, that’s the backdrop. Let’s talk Dwarkesh.

What the new Dwarkesh Patel episode argues

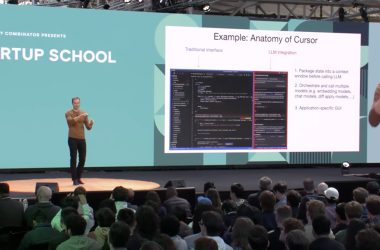

Dwarkesh just released a long-form interview with Andrej Karpathy titled, yes, “AGI is still a decade away.” It’s not clickbait. The episode lays out a concrete view: this is the decade of agents, not the year of agents, because the current systems have big holes to patch—computer use, memory that sticks, true multimodality, and robust self-improvement loops. Karpathy’s point is simple and a little humbling: these are tractable problems, but they’re still hard, and grinding through them takes time.

A few takeaways that matter for timelines:

- Reason for “a decade”: Karpathy says today’s agents don’t “just work” as reliable coworkers. They need to be better at using computers, learning on the job, and keeping useful context over time. Those aren’t cosmetic upgrades. They’re structural. That’s why he thinks it’s a years-long slog rather than a product sprint.

- The model core may shrink: He floats a striking idea. With better data and distillation, the “cognitive core” doesn’t have to be giant. Smaller, smarter cores plus retrieval for facts could be the path. That doesn’t shorten the path by magic, but it reframes what to optimize.

- Economic framing: He suggests that even big capability gains may look like they “blend” into steady growth—less fireworks, more compounding. That’s a useful antidote to headline hype and a reminder that deployment frictions can soak up a lot of the shock.

The episode doesn’t say “slow down.” It says “these particular bottlenecks take real engineering cycles.” If you’ve spent time trying to wire an LLM into your daily stack, that probably rings true.

In June 2025, Dwarkesh wrote “Why I don’t think AGI is right around the corner,” and he made a call that still feels grounded: today’s models struggle with organic, on-the-job learning. Humans collect little lessons, adjust, and keep going. Models, as deployed, don’t. He expects progress, but he thinks we’ll get warning shots before we get systems that truly learn like people.

He also offered concrete milestones (which I love, because milestones are easier to argue about than vibes). He’s roughly 50/50 on a smart system that can handle a complex, week-long office task—like wrangling small-business taxes across websites and email—by 2028. He puts human-like “learn on the job” behavior around 2032. And he notes something that should be on a sticky note above every GPU rack: the compute scale-up we’ve ridden “can’t keep going past this decade,” so if we don’t find better methods, timelines could stretch.

Here’s the mental model he uses: the distribution over AGI arrival is very skewed. Lots of weight in the next few years, but if we miss that wave and compute plateaus, the annual probability may fall for a while. That’s not a prediction of stasis—just a warning that “this decade or bust” is a real possibility.
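One way to see the shape of that argument is to turn a per-year arrival chance into a running total. The sketch below does that. The hazard numbers in it are hypothetical, picked only to show a front-loaded curve. They are not Dwarkesh's figures or anyone else's forecast.

```python
def cumulative_probability(hazards):
    """Convert per-year arrival chances into a running cumulative probability.

    Each hazard is the chance of arrival in that year, given no arrival so far.
    """
    survival = 1.0  # probability that arrival has not happened yet
    cumulative = []
    for h in hazards:
        survival *= 1.0 - h
        cumulative.append(1.0 - survival)
    return cumulative

# HYPOTHETICAL hazards: higher while compute keeps scaling (through 2030),
# lower afterwards if the scale-up stalls and no better methods show up.
years = list(range(2026, 2041))
hazards = [0.08 if y <= 2030 else 0.02 for y in years]

for y, c in zip(years, cumulative_probability(hazards)):
    print(y, f"{c:.0%}")
```

Whatever numbers you plug in, the pattern is the same: the curve climbs fast while the yearly chance is high, then flattens once it drops. That is the “this decade or bust” shape in miniature.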

If you pair the essay with the Karpathy interview, you get a consistent story: exciting capability jumps, plus sober bottlenecks in agents, memory, and learning.

So… when is AGI likely?

Let me commit to clear numbers while staying honest about uncertainty.

- Most likely window for an HLMI-style capability: 2030 to 2047. The early edge here mirrors the Metaculus median and the thick chunk of mass in the late 2020s through early 2030s. The late edge reflects the 2023 researcher median for HLMI. Note how wide that window is. That’s not me hedging; it’s the field disagreeing in public.

- Non-trivial early odds: There’s real probability on an arrival before 2030. Metaculus puts the lower quartile around late 2026, and the 80,000 Hours synthesis puts roughly 25% by 2027. That’s not a fringe number. It’s a full quarter of a forecasting community’s probability mass.

- Why not call it sooner? Because the Karpathy-style bottlenecks look like years of engineering and data work, not months. And because survey medians still sit in the 2040s, which we shouldn’t wave away.

If you’re making plans, treat 2030–2047 as the main band, with an earlier tail worth preparing for and a long tail if full job automation (not just task ability) becomes your bar.

What could pull the date closer

Three levers stand out, and each has a very human flavor.

- More compute, faster iteration. If the training budgets keep rising and hardware plus power actually land on time, labs get more shots on goal and shorter feedback loops. That accelerates learning, even if each single model is only a step.

- Better “agent” guts. The minute we see solid, widely reproduced advances in tool use, computer control, long-horizon planning, and memory that survives contact with the real world, timelines can slide earlier. Karpathy’s “decade of agents” frames exactly this. If those pieces click, the year count compresses.

- Clearer, harder evaluations. Shared, robust tests for broad competence and reliability focus the work. Once the targets stop moving, improvement often speeds up because everyone’s rowing toward the same buoy.

A quick aside: public comments from people like Jensen Huang—“five years” under a test-based definition—matter less as prophecy and more as a signal that capital and supply chains are gearing for a sprint. Money is a forecast with consequences.

What could push it out

Plenty can slow the march without stopping it.

- Physical constraints. Power, land, cooling, and high-end chips don’t grow on trees. Buildouts slip. When they do, training cadence slows, and so does learning at the frontier.

- Safety and governance gates. If regulators require stronger pre-deployment testing or audits, or if labs self-impose “gates” between leaps, progress still comes, just with thicker guardrails.

- A missing idea. It’s possible we’ll need a few new conceptual moves—beyond more data and bigger clusters—to get robust, reliable general competence. Karpathy’s episode hints at this gently: we’re still figuring out the recipe for agents that feel like dependable teammates. That’s solvable, but it can add years.

You know what? I find this oddly grounding. We live in a season when screenshots can make it feel like the ground is moving under our feet every week. Listening to a builder say “this will take a decade,” and reading a host admit “my gut says not quite yet,” makes room to breathe—and to work. It’s okay to be excited and cautious at the same time. Most good engineering has both.

If you’re building, researching, or just trying to keep up

Keep your hands on the tools. If agents really are this decade’s story, then the teams that learn to pair them with human judgment—clean interfaces, crisp evals, smart escalation paths—will move faster when the bottlenecks relax. If you’re a researcher, the places to push are clear: computer use, memory that lasts, learning as you work. If you’re an observer, make space for both sides of the feeling—wonder at what already works, and patience for the stuff that still doesn’t.

And if you want just one line to carry into your week, try this: watch the agents. They’ll tell you when the calendar matters.